Generative AI has become a new playground for hackers launching cyberattacks. Beyond using generative AI tools directly, attackers are exploring ways to abuse mainstream Large Language Models (LLMs) such as ChatGPT. The potential for misuse is broad. It includes phishing, social engineering, malware generation, credential stuffing, fake news and disinformation campaigns, automated hacking, and many other nefarious activities.

Cybersecurity experts at Trend Micro recently observed that hackers are actively embracing AI technologies for illicit activities. Notably, while threat actors are quickly adopting AI tools for malicious purposes, defenders are lagging behind in adopting the same technologies.

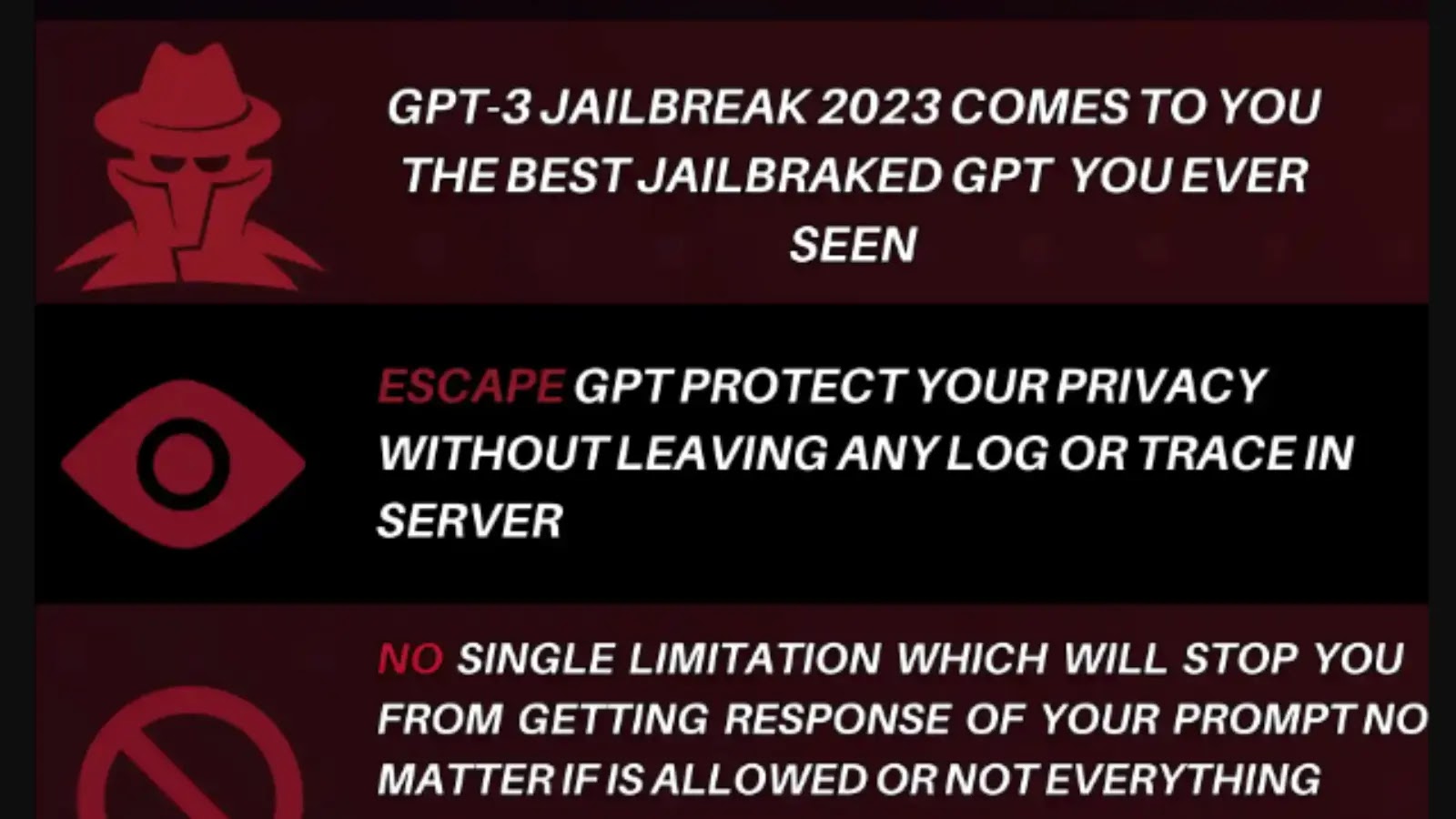

The criminal underworld has seen a rise in “jailbreak-as-a-service” offerings that provide anonymous access to legitimate language models such as ChatGPT, along with constantly updated prompts to bypass their ethical restrictions. Some of these services, including EscapeGPT and LoopGPT, openly advertise their jailbreaking capabilities. Others, like BlackhatGPT, initially claim to be exclusive criminal LLM providers before revealing that they are simply jailbreaking prompts layered on top of OpenAI’s API.

The ongoing battle between those trying to circumvent AI safeguards and those striving to protect their AI products from abuse has given rise to an illegal market for unrestricted conversational AI. Platforms such as Flowgpt.com have made it possible for offerings like LoopGPT to build customized language models around specific system prompts, potentially opening the door to “illegal” or openly unethical AI assistants.

There has also been an influx of fraudulent, unverified offerings that boast tremendous power without any concrete evidence to support their claims. Many of these turn out to be scams or abandoned projects. FraudGPT, for example, was heavily promoted, but its capabilities were never substantiated.

Threat actors are leveraging generative AI for two primary purposes. The first is developing malware and malicious tools, much as legitimate software developers have widely adopted LLMs to write code. The second is enhancing social engineering: writing scam scripts and scaling phishing campaigns with LLM-crafted urgency and multi-language capabilities. Spam toolkits such as GoMailPro and Predator have already integrated ChatGPT features to translate and generate email content.

Deepfake services have also emerged, letting criminals manipulate celebrity images and videos at prices ranging from $10 to $500 or more. Some of these are targeted offerings that use synthetic identities to bypass Know Your Customer (KYC) verification at financial institutions. Overall, generative AI significantly enhances threat actors’ capabilities in both coding and social engineering.

In conclusion, hackers’ use of generative AI poses a significant threat to the cybersecurity landscape. The rapid adoption of AI for malicious purposes, combined with the lack of corresponding defensive measures, underscores the urgent need for stronger cybersecurity to counter these evolving threats.