Regulators are grappling with the rise of AI-generated deepfakes, using existing fraud and deceptive-practice rules to combat their misuse. As the technology behind deepfakes becomes increasingly sophisticated, agencies like the Federal Trade Commission (FTC) and the Securities and Exchange Commission (SEC) are finding creative ways to apply those rules to the risks posed by this deceptive content.

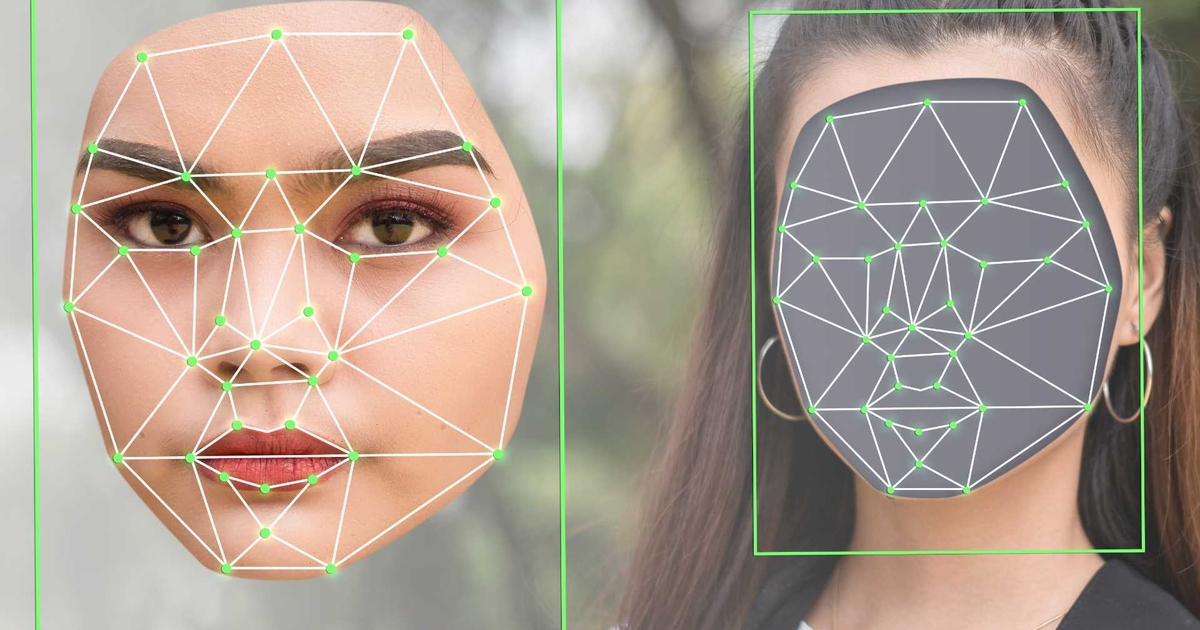

AI-generated deepfakes are now convincing enough that visual evidence can no longer be fully trusted. According to Yu Chen, a professor at Binghamton University, the development of tools to differentiate between authentic images and deepfakes is an ongoing process. However, even when individuals are aware that an image or video is fake, there are still significant challenges in combating the spread and impact of this technology.

Lina M. Khan, the chair of the FTC, made it clear that using AI tools for fraudulent or deceptive purposes is illegal. In her statement in September, Khan emphasized that AI-powered deception falls under the purview of existing laws, and that the FTC is committed to pursuing those who engage in AI-enabled fraud.

The use of deepfakes for corporate fraud and misleading practices has also raised concerns. For instance, creating a deepfake of an executive making false announcements about a company’s actions could lead to stock market manipulation. If such deepfakes are used to deceive investors, the SEC may intervene and take legal action against those responsible.

Joanna Forster, a partner at Crowell & Moring and former deputy attorney general for Corporate Fraud in California, notes that fraudulent intent is a key element in cases involving deepfakes. The proactive stance taken by agencies like the FTC highlights the importance of addressing this issue swiftly and effectively.

While there are some state-level laws addressing deepfakes and privacy concerns, there is a lack of clear federal legislation on enforcement. Recent legal challenges, such as the injunction against a California law targeting election-related deepfakes, underscore the complexities surrounding regulating these deceptive practices at a national level.

Privacy and accountability challenges associated with deepfakes extend beyond legal frameworks. Debbie Reynolds, a privacy expert, emphasizes that the origins of deepfakes are difficult to trace online, which makes it hard to hold creators accountable. Edward Lewis, CEO of CyXcel, highlights the need for organizations to implement robust AI governance policies to address the risks posed by deepfakes and other AI-generated content.

As regulators navigate the evolving landscape of AI-generated deepfakes, proactive measures such as AI governance policies and continuous monitoring will be crucial in mitigating risks and maintaining trust in the digital realm. While federal legislation specific to deepfakes may be lacking, the concerted efforts of regulatory bodies signal a commitment to combating deceptive practices in the age of advanced artificial intelligence.