YouTube found itself dealing with a phishing campaign last week when fraudulent AI-generated videos impersonating its CEO, Neal Mohan, began circulating. These deepfake videos were shared privately with content creators on the platform in an attempt to deceive them, install malware, and steal their credentials.

In response, YouTube issued a statement warning users about the deceptive videos. The platform stressed that it would never communicate important information through private videos and offered guidance on how to identify and avoid such scams.

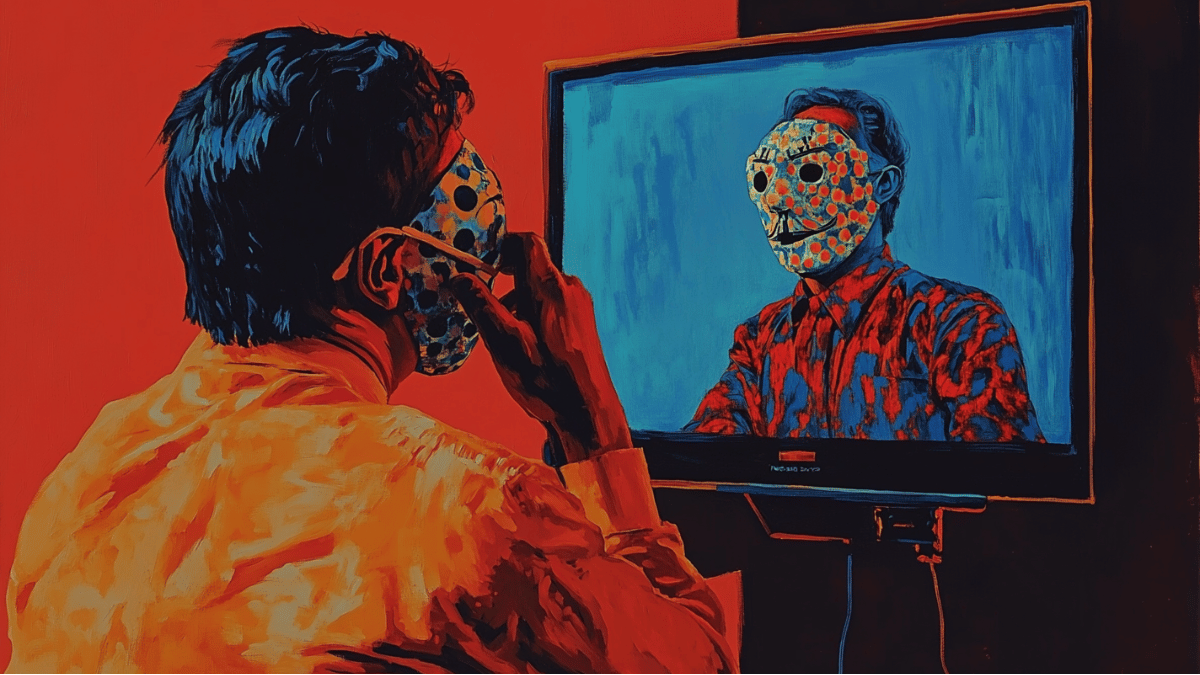

Targeted users received emails that appeared to come from YouTube, stating that a private video had been shared with them. The video featured a highly realistic deepfake of Neal Mohan that convincingly mimicked his appearance, voice, and mannerisms. It instructed viewers to click a link that redirected them to a page asking them to sign in to their accounts to confirm that they understood and accepted updated YouTube Partner Program terms. In reality, the page was a decoy designed to capture users' credentials for fraudulent purposes.

This incident highlights a concerning trend in social media scams. Platforms such as LinkedIn and Facebook have been targeted by scammers before, but using AI to make attacks more sophisticated and credible is a new and troubling development. According to the recently released AI Safety Report, AI-generated content is increasingly prevalent online, and a significant share of users report encountering deepfakes in various forms over the past six months.

To counter AI-generated fake content, techniques such as watermarking have been used to verify the authenticity of digital media. However, sophisticated adversaries are finding ways to circumvent these measures, which underscores the need for stronger security protocols.

Industry experts who have commented on the YouTube incident argue that traditional threat-prevention methods are inadequate against modern AI-driven attacks. They call for AI-powered tools that provide real-time visibility and alerting, along with machine-driven response mechanisms that can quickly counter emerging threats.

As deepfake technology advances and AI-generated content becomes harder to distinguish from genuine material, individuals, organizations, and governments must equip themselves with the tools needed to counter this evolving threat. Educational initiatives and informational resources also play a crucial role in raising awareness and curbing the spread of deepfakes.