A recent analysis conducted by software supply chain security company Rezilion has revealed that integrating generative AI and other artificial intelligence applications into existing software products and platforms poses significant security risks for organizations. While there is a growing interest in utilizing these AI projects, they are still relatively new and immature in terms of security.

One of the main concerns highlighted by Rezilion’s analysis is the security of GitHub projects built on GPT-3.5. Since ChatGPT’s launch earlier this year, more than 30,000 open source projects have integrated this technology, which raises questions about the security measures these projects have in place.

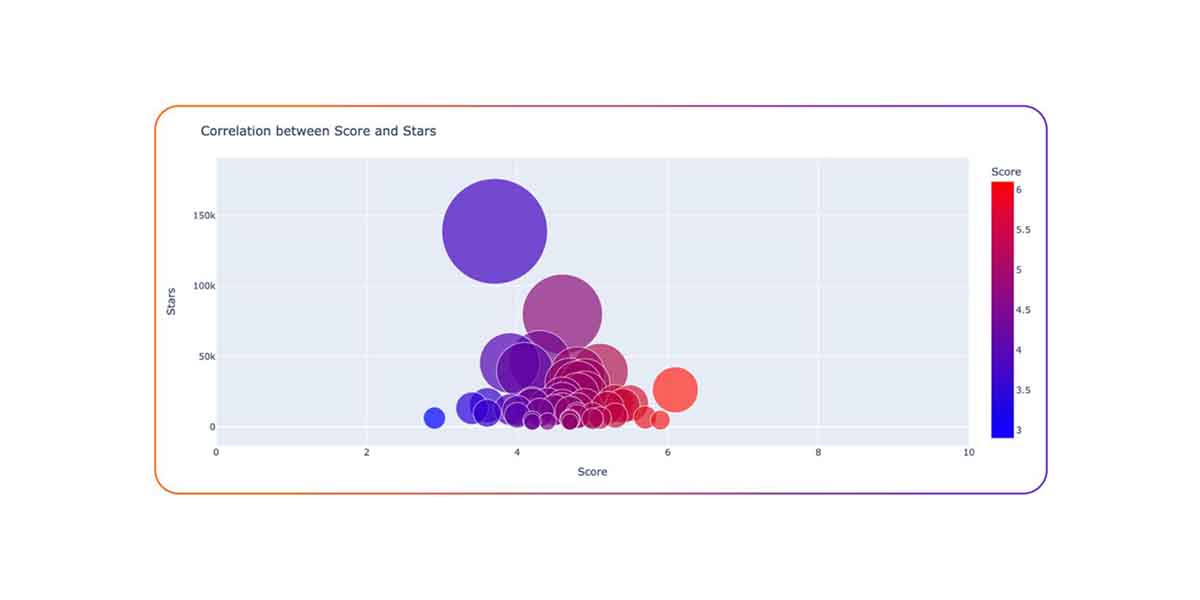

To address these concerns, Rezilion’s team of researchers analyzed the 50 most popular Large Language Model (LLM)-based projects on GitHub. They evaluated each project’s security posture using its OpenSSF Scorecard score, a rating from 0 to 10 that assesses factors such as vulnerability count, code maintenance, dependencies, and the presence of binary files. None of the analyzed projects scored higher than 6.1, and the average score was 4.6 out of 10, which points to a high level of security risk and numerous unresolved issues. Even the most popular project, Auto-GPT, scored only 3.7, making it particularly risky from a security perspective.
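The kind of summary reported above (average, highest score, and projects falling below a chosen bar) can be sketched in a few lines of Python. This is only an illustration, not Rezilion's method: the function name `summarize_scores`, the threshold of 5.0, and the two `example-*` entries are hypothetical, and only Auto-GPT's 3.7 comes from the report.

```python
from statistics import mean


def summarize_scores(scores, threshold=5.0):
    """Summarize Scorecard-style scores (0 to 10, higher is better).

    `scores` maps a project name to its score. `threshold` is an
    arbitrary cut-off chosen for illustration, not an OpenSSF value.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    values = list(scores.values())
    return {
        "average": round(mean(values), 1),
        "highest": max(values),
        # Projects scoring strictly below the threshold, in name order.
        "below_threshold": sorted(
            name for name, score in scores.items() if score < threshold
        ),
    }


# Auto-GPT's score is taken from the report; the other two entries
# are hypothetical placeholders, not real projects or real scores.
sample = {"Auto-GPT": 3.7, "example-llm-app": 5.0, "example-agent": 4.2}
summary = summarize_scores(sample)
print(summary)
```

A team could feed real Scorecard results into the same function and pick a threshold that matches its own risk tolerance.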

When organizations consider integrating open source projects into their codebase, they typically take into account factors such as stability, support, and active maintenance. However, there are additional risks that need to be considered, including trust boundary risks, data management risks, and inherent model risks.

The researchers from Rezilion emphasize the importance of understanding the project’s stability and long-term maintenance. New projects often experience rapid growth in popularity before reaching a peak in community activity as they mature. It’s crucial to assess whether a project will continue to evolve and receive maintenance in the long run.

The age of a project plays a significant role in its security posture. Most of the projects analyzed were between two and six months old. Looking at age and Scorecard score together, the most common combination was a two-month-old project with a score between 4.5 and 5.
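Finding the most common combination of age and score band is a simple counting exercise. The sketch below assumes half-point bands that include their lower bound (for example 4.5 up to, but not including, 5.0), which the report does not specify. The helper names and the sample data are hypothetical and do not reproduce Rezilion's dataset.

```python
from collections import Counter


def score_band(score, width=0.5):
    """Return the (low, high) band of the given width containing `score`."""
    low = int(score // width) * width
    return (low, low + width)


def most_common_combo(projects):
    """Return ((age_in_months, band), count) for the most frequent pairing.

    `projects` is an iterable of (age_in_months, score) pairs.
    """
    counts = Counter((age, score_band(score)) for age, score in projects)
    if not counts:
        raise ValueError("projects must not be empty")
    return counts.most_common(1)[0]


# Hypothetical (age in months, score) pairs, for illustration only.
sample_projects = [(2, 4.6), (2, 4.8), (3, 4.7), (6, 3.9), (2, 5.2)]
combo, count = most_common_combo(sample_projects)
print(combo, count)
```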

Rezilion’s researchers note that newly established LLM projects tend to gain popularity quickly. However, their Scorecard scores remain relatively low, indicating that their security posture has not kept pace with their adoption.

In light of these findings, development and security teams should carefully evaluate the risks associated with adopting new technologies. A thorough evaluation before incorporating AI projects into existing software products and platforms can help identify potential security issues and mitigate them early, protecting organizations from potential cyber threats.